This assumption is partially true but also wrong. The explanation for this over-processing can be found in the CEPH code:

    scrubber.time_for_deep = ceph_clock_now() >= ...
    // If we randomize when !allow_scrub && allow_deep_scrub, then it guarantees
    // we will deep scrub because this function is called often.
    if (!scrubber.time_for_deep && allow_scrub)
      deep_coin_flip = (rand() % 100) < cct->_conf->osd_deep_scrub_randomize_ratio * 100;
    scrubber.time_for_deep = (scrubber.time_for_deep || deep_coin_flip);

Basically, deep scrubbing also depends on a fourth parameter, even if the documentation is not really clear about it:

osd_deep_scrub_randomize_ratio – this is the probability that a PG which is not yet due for deep scrubbing is randomly added to the deep-scrubbing batch anyway.

Assuming the deep-scrubbing allocation is made once a day, any PG is likely to be selected by this random procedure at some point during every week. In other words, statistically more than 50% of the PGs will be processed twice a week. This is why all the PGs were processed within a 4-day period even with a 1-week interval.
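To get a feel for the effect of this random selection, the arithmetic can be sketched as follows. This is a rough model, not CEPH's behaviour: it assumes one independent random draw per PG per day (the same assumption as in the text), and it uses a ratio of 0.15, which I believe is the CEPH default but which is not stated in this post. The function names are hypothetical. Since the code comment says the function is called often, the real selection rate may well be higher.

```python
def prob_selected_within(days: int, ratio: float) -> float:
    """Probability that a PG is randomly picked at least once in `days`
    independent daily draws, each succeeding with probability `ratio`."""
    return 1.0 - (1.0 - ratio) ** days


def expected_days_between_selections(ratio: float) -> float:
    """Mean number of daily draws until a PG is randomly picked
    (mean of a geometric distribution)."""
    return 1.0 / ratio


if __name__ == "__main__":
    ratio = 0.15  # assumption: osd_deep_scrub_randomize_ratio left at 0.15
    for days in (1, 4, 7):
        print(f"{days} day(s): {prob_selected_within(days, ratio):.1%}")
    print(f"mean gap: {expected_days_between_selections(ratio):.2f} days")
```

Under these assumptions a PG has roughly a 68% chance of being picked at random within 7 days, so it is more likely than not to be deep scrubbed before its 1-week interval expires.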


Since a deep scrub started shortly before 7am keeps running past the end of the window, the I/O level decreased after 7am but was still high until 8am. It's important to set the scrubbing time window correctly with regard to the server activity. Nightly processing makes sense if the supported workload is regional and driven by human transactions. For batch or international workloads, a global time window (from 0:00 to 24:00) looks more appropriate.

osd deep scrub interval – this parameter defines the period for executing a deep scrub on a PG. It means that when the deep scrubbing schedules its work, it marks every PG that has not been deep scrubbed within this interval as part of the batch. Basically, the level of parallel deep scrubbing is related to this parameter and to the time window. A one-week period seems a really conservative setting.

After some investigation, it appears that even though the interval was 1 week, no PG had a last deep scrub older than 4 days. As a consequence, if we were looking to extend the period or the time window, that would not be a solution here, because the PGs are processed more often than the expected period.
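The interval rule described above (a PG joins the batch once its last deep scrub is older than the interval) can be sketched like this. It is a minimal illustration under my own assumptions, not CEPH code: the function name, the PG ids and the timestamps are all hypothetical.

```python
from datetime import datetime, timedelta


def pgs_due_for_deep_scrub(last_deep_scrub, now, interval):
    """Return the sorted ids of PGs whose last deep scrub is at least
    `interval` old at time `now`.

    last_deep_scrub: dict mapping a PG id to the datetime of its last deep scrub.
    """
    return sorted(
        pg for pg, stamp in last_deep_scrub.items() if now - stamp >= interval
    )


if __name__ == "__main__":
    now = datetime(2020, 1, 15)
    # Hypothetical PG ids and last deep-scrub times.
    stamps = {
        "1.a": now - timedelta(days=8),
        "1.b": now - timedelta(days=3),
        "1.c": now - timedelta(days=7),
    }
    print(pgs_due_for_deep_scrub(stamps, now, timedelta(weeks=1)))
```

With a rule like this alone, a PG scrubbed 4 days ago would never be selected under a 1-week interval, which is why the observed behaviour needed another explanation.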

The I/O performance shown in the metric history graph was caused by the deep-scrubbing process running on a daily basis from 0:00am to 7:00am. To understand this, let's look at the configuration I had.

osd scrub begin/end hour – these parameters define the scrubbing time window. In my example, scrubs are planned between 0am and 7am. Deep scrubbing takes time: if the last deep scrub starts just before 7am, the processing can end about 1 hour later.
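The time-window rule above can be sketched as a small check. This is a minimal sketch and not CEPH's actual implementation: the function name `in_scrub_window` is hypothetical, and the handling of a window that wraps past midnight, or whose begin and end hours are equal, is my assumption of reasonable behaviour.

```python
def in_scrub_window(hour: int, begin_hour: int, end_hour: int) -> bool:
    """Return True if a new scrub may start at `hour` (0-23).

    Hypothetical helper, loosely modelled on osd scrub begin/end hour.
    """
    if begin_hour == end_hour:
        # Assumption: identical bounds mean "no restriction".
        return True
    if begin_hour < end_hour:
        # Plain window, e.g. 0 -> 7: hours 0 to 6 are allowed.
        return begin_hour <= hour < end_hour
    # Window wrapping past midnight, e.g. 22 -> 6.
    return hour >= begin_hour or hour < end_hour


if __name__ == "__main__":
    for h in (0, 6, 7, 8):
        print(h, in_scrub_window(h, 0, 7))
```

Note that a check like this only decides whether a scrub may start. A deep scrub started at 6:59 keeps running after 7am, which matches the I/O tail observed until about 8am.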

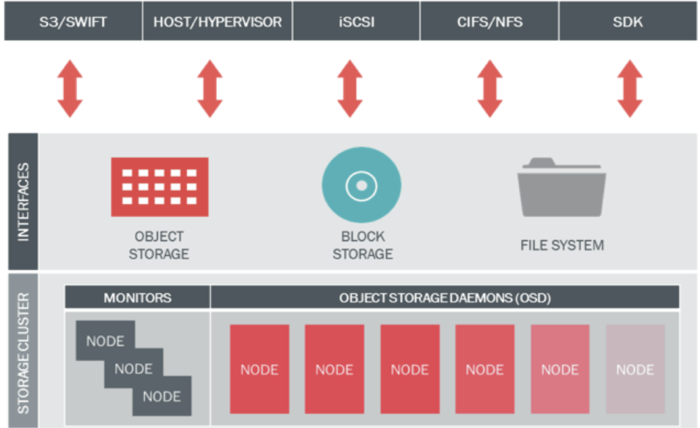

CEPH scrubbing

CEPH uses two types of scrubbing (regular scrubbing and deep scrubbing) to check storage health. The scrubbing process is usually executed on a daily basis.

Ceph is a distributed storage system used in cloud environments. Recently I faced I/O congestion during the night. This I/O saturation was impacting application performance on OpenStack, even though the system was really resilient to this activity level. In this post I'll explain why this situation occurred on the CEPH infrastructure and which settings can be modified to change this I/O level.
